NVIDIA A100 PCIe 80GB GPU

Highest versatility for all workloads

- Released June 28th, 2021

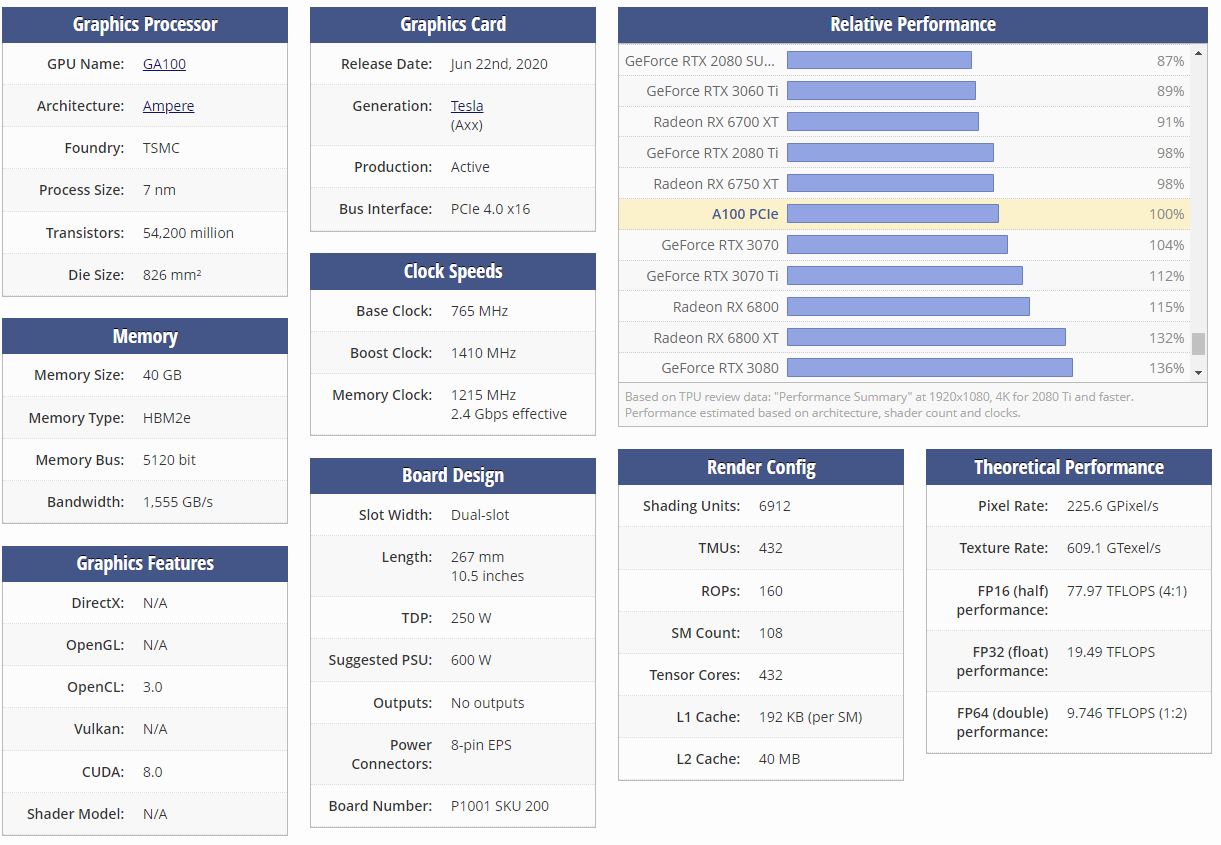

- GA100 Graphics Processor

- 6912 Cores

- 300W TDP

- 432 TMUs

- 160 ROPs

- 80GB Memory Size

- HBM2e Memory Type

- 5120-bit Bus Width


USD $19,222.00

*RRP Pricing*

Unprecedented Acceleration for the World’s Highest-Performing Elastic Data Centers

The NVIDIA A100 80GB Tensor Core GPU delivers unprecedented acceleration at every scale to power the world’s highest-performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. As the engine of the NVIDIA data center platform, A100 provides up to 20x higher performance than the prior NVIDIA Volta generation. A100 can efficiently scale up or be partitioned into seven isolated GPU instances, with Multi-Instance GPU (MIG) providing a unified platform that enables elastic data centers to dynamically adjust to shifting workload demands.

A100 is part of the complete NVIDIA data center solution that incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from NGC. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale, while allowing IT to optimize the utilization of every available A100 GPU.

The A100 PCIe 80 GB is a professional graphics card by NVIDIA, launched on June 28th, 2021. It is built on a 7 nm process and based on the GA100 graphics processor. The card does not support DirectX 11 or DirectX 12, so it cannot be expected to run current games. GA100 is a large chip with a die area of 826 mm² and 54.2 billion transistors. It features 6912 shading units, 432 texture mapping units, and 160 ROPs, along with 432 Tensor Cores that speed up machine learning applications. NVIDIA has paired the GPU with 80 GB of HBM2e memory, connected through a 5120-bit memory interface. The GPU runs at a base frequency of 1065 MHz and can boost up to 1410 MHz; the memory runs at 1512 MHz, which matches the 1,935 GB/s bandwidth listed in the specification table.
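The headline throughput figures can be cross-checked from the unit counts and clocks quoted above. The following is a minimal sketch, assuming one fused multiply-add (2 FLOPs) per shading unit per clock and double-data-rate memory transfers; the function names are illustrative. Two memory clocks are evaluated as assumptions: 1512 MHz reproduces the 1,935 GB/s PCIe figure in the specification table, and 1593 MHz reproduces the 2,039 GB/s SXM figure.

```python
def peak_fp32_tflops(shading_units: int, boost_mhz: float) -> float:
    """Peak FP32 rate, assuming one FMA (2 FLOPs) per shading unit per clock."""
    return shading_units * 2 * boost_mhz * 1e6 / 1e12


def memory_bandwidth_gbs(bus_width_bits: int, memory_clock_mhz: float) -> float:
    """Peak memory bandwidth, assuming two transfers per memory clock (DDR)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * 2 * memory_clock_mhz * 1e6 / 1e9


# 6912 shading units at the 1410 MHz boost clock
fp32 = peak_fp32_tflops(6912, 1410)

# 5120-bit interface at two candidate memory clocks (assumed values)
bw_1512 = memory_bandwidth_gbs(5120, 1512)
bw_1593 = memory_bandwidth_gbs(5120, 1593)

print(f"Peak FP32:           {fp32:.2f} TFLOPS")
print(f"Bandwidth @1512 MHz: {bw_1512:.0f} GB/s")
print(f"Bandwidth @1593 MHz: {bw_1593:.0f} GB/s")
```

The FP32 result rounds to the 19.5 TFLOPS listed in the table, which suggests the table figures are theoretical peaks at boost clock rather than sustained rates.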

The NVIDIA A100 PCIe 80 GB is a dual-slot card that draws power from an 8-pin EPS power connector, with power draw rated at 300 W maximum. It has no display outputs, as it is not designed to have monitors connected to it. The card connects to the rest of the system through a PCI-Express 4.0 x16 interface, measures 267 mm in length, and features a dual-slot cooling solution.
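As a rough guide to what the PCI-Express 4.0 x16 link can carry, the sketch below works out the link bandwidth. It assumes the PCIe 4.0 signalling rate of 16 GT/s per lane and 128b/130b line encoding; the function name is illustrative. The 64 GB/s quoted in the specification table corresponds to the raw bidirectional rate before encoding overhead.

```python
def pcie_bandwidth_gbs(gt_per_s: float, lanes: int, encoding: float = 128 / 130) -> float:
    """Bandwidth in one direction, in GB/s, after line-encoding overhead."""
    return gt_per_s * lanes * encoding / 8


# PCIe 4.0 x16: 16 GT/s per lane, 16 lanes
one_way = pcie_bandwidth_gbs(16.0, 16)
both_ways = 2 * one_way

# Raw rate with encoding overhead ignored (encoding factor of 1.0)
raw_both_ways = 2 * pcie_bandwidth_gbs(16.0, 16, encoding=1.0)

print(f"Per direction:      {one_way:.1f} GB/s")
print(f"Bidirectional:      {both_ways:.1f} GB/s")
print(f"Raw bidirectional:  {raw_both_ways:.0f} GB/s")
```

Real transfers see somewhat less than these figures because of packet and protocol overhead, which this sketch does not model.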

Start configuring your GP-GPU Server now!

The core of AI and HPC in the modern data center

Scientists, researchers, and engineers, the da Vincis and Einsteins of our time, are working to solve the world’s most important scientific, industrial, and big data challenges with AI and high-performance computing (HPC). Meanwhile, businesses and even entire industries seek to harness the power of AI to extract new insights from massive data sets, both on-premises and in the cloud. The NVIDIA Ampere architecture, designed for the age of elastic computing, delivers the next giant leap by providing unmatched acceleration at every scale, enabling these innovators to do their life’s work.


| Specification | A100 80GB PCIe | A100 80GB SXM |
|---|---|---|
| FP64 | 9.7 TFLOPS | 9.7 TFLOPS |
| FP64 Tensor Core | 19.5 TFLOPS | 19.5 TFLOPS |
| FP32 | 19.5 TFLOPS | 19.5 TFLOPS |
| Tensor Float 32 (TF32) | 156 TFLOPS (312 TFLOPS*) | 156 TFLOPS (312 TFLOPS*) |
| BFLOAT16 Tensor Core | 312 TFLOPS (624 TFLOPS*) | 312 TFLOPS (624 TFLOPS*) |
| FP16 Tensor Core | 312 TFLOPS (624 TFLOPS*) | 312 TFLOPS (624 TFLOPS*) |
| INT8 Tensor Core | 624 TOPS (1248 TOPS*) | 624 TOPS (1248 TOPS*) |
| GPU Memory | 80GB HBM2e | 80GB HBM2e |
| GPU Memory Bandwidth | 1,935 GB/s | 2,039 GB/s |
| Max Thermal Design Power (TDP) | 300W | 400W*** |
| Multi-Instance GPU | Up to 7 MIGs @ 10GB | Up to 7 MIGs @ 10GB |
| Form Factor | PCIe, dual-slot air-cooled or single-slot liquid-cooled | SXM |
| Interconnect | NVIDIA® NVLink® Bridge for 2 GPUs: 600 GB/s**; PCIe Gen4: 64 GB/s | NVLink: 600 GB/s; PCIe Gen4: 64 GB/s |
| Server Options | Partner and NVIDIA-Certified Systems™ with 1-8 GPUs | NVIDIA HGX™ A100: Partner and NVIDIA-Certified Systems with 4, 8, or 16 GPUs; NVIDIA DGX™ A100 with 8 GPUs |

\* With sparsity.

\*\* SXM4 GPUs via HGX A100 server boards; PCIe GPUs via NVLink Bridge for up to two GPUs.

\*\*\* 400W TDP for standard configuration; the HGX A100-80GB custom thermal solution (CTS) SKU can support TDPs up to 500W.
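The Multi-Instance GPU row above can be read as a slice budget. The sketch below is a simplified model, not NVIDIA's placement algorithm: it assumes the A100 80GB exposes 7 compute slices and 8 memory slices of 10 GB each, and that the instance profiles carry the slice counts shown. The profile names and slice counts are assumptions drawn from NVIDIA's MIG documentation rather than from this page, and the real driver also enforces placement rules that are not modelled here.

```python
# Assumed MIG profiles for A100 80GB: name -> (compute slices, memory slices)
PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}

MAX_COMPUTE_SLICES = 7  # assumed compute slice budget per GPU
MAX_MEMORY_SLICES = 8   # assumed memory slice budget (10 GB each)


def fits(requested: list[str]) -> bool:
    """Return True if the requested profiles stay within both slice budgets.

    Only slice totals are checked; driver placement constraints are ignored.
    """
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= MAX_COMPUTE_SLICES and memory <= MAX_MEMORY_SLICES


print(fits(["1g.10gb"] * 7))                     # seven 10 GB instances
print(fits(["4g.40gb", "3g.40gb"]))              # one large plus one medium
print(fits(["3g.40gb", "3g.40gb", "1g.10gb"]))   # exceeds the memory budget
```

Under this model, seven `1g.10gb` instances use all compute slices, which corresponds to the "Up to 7 MIGs @ 10GB" entry in the table.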

| Title | Version | Date | Size |
|---|---|---|---|
| A100 80GB for PCIe | 1 | 01-07-2021 | 389KB |

Tags: NVIDIA, GP, GPU, A100, 80GB, PCIe, Ampere, HPC, AI, Deep Learning, TESLA, 300W
