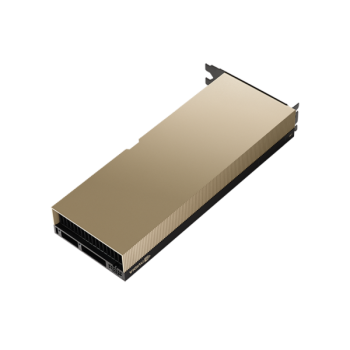

NVIDIA L40S GPU

- Launched October 13th, 2022

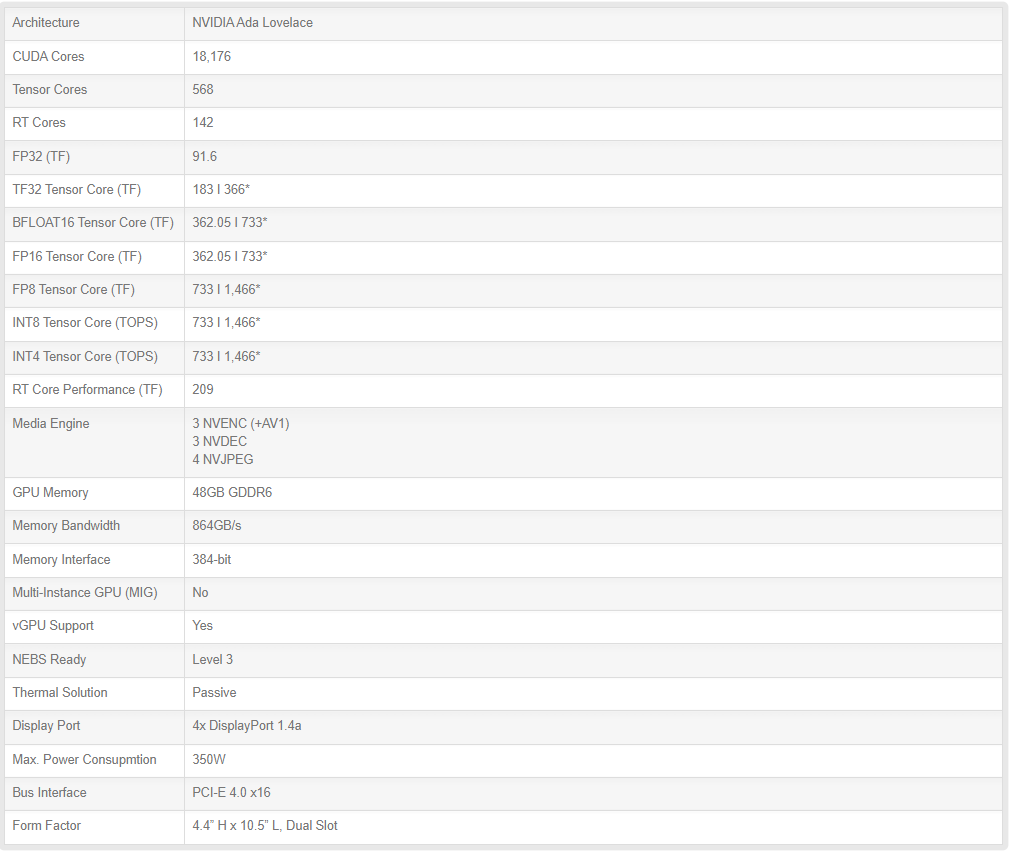

- NVIDIA Ada Lovelace GPU architecture

- 48GB GDDR6 memory with ECC

- 18,176 CUDA Cores

- 142 RT Cores

- 568 Tensor Cores

- Max. Power Consumption: 350W

- Interconnect Bus: PCIe 4.0 x16

- Thermal Solution: Passive

- Form Factor: Full-height full-length, 2-slot

- vGPU Software Support

- Secure Boot with Root of Trust

- NEBS Ready


USD $11,950.00

*RRP pricing*


The Most Powerful Universal GPU

Experience breakthrough multi-workload performance with the NVIDIA L40S GPU. Combining powerful AI compute with best-in-class graphics and media acceleration, the L40S GPU is built to power the next generation of data center workloads—from generative AI and large language model (LLM) inference and training to 3D graphics, rendering, and video.

Start configuring your GP-GPU Server now!


Powered by the NVIDIA Ada Lovelace Architecture

NVIDIA Omniverse

Create and operate metaverse applications

NVIDIA Omniverse™ makes it possible to connect, develop, and operate the next wave of industrial digitalization applications. With powerful RTX graphics and AI capabilities, L40S delivers exceptional performance for Universal Scene Description (OpenUSD)-based 3D and simulation workflows built on Omniverse.

Third-Generation RT Cores

Enhanced throughput and concurrent ray-tracing and shading capabilities improve ray-tracing performance, accelerating renders in product design and in architecture, engineering, and construction (AEC) workflows. See lifelike designs in action with hardware-accelerated motion blur for stunning real-time animations.

Fourth-Generation Tensor Cores

Hardware support for structural sparsity and optimized TF32 format provides out-of-the-box performance gains for faster AI and data science model training. Accelerate AI-enhanced graphics capabilities, including DLSS, delivering upscaled resolution with better performance in select applications.
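To make "structural sparsity" concrete: on NVIDIA Tensor Cores this refers to a fine-grained 2:4 pattern, in which every consecutive group of four weights holds at most two nonzero values. The sketch below is a minimal, library-free illustration of magnitude-based 2:4 pruning. The function names `prune_2_4` and `satisfies_2_4` are hypothetical helpers for this example, not part of any NVIDIA API, and real workflows typically fine-tune the model after pruning to recover accuracy.

```python
from typing import List


def prune_2_4(weights: List[float]) -> List[float]:
    """Hypothetical helper: apply 2:4 structured sparsity by keeping the two
    largest-magnitude weights in every consecutive group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def satisfies_2_4(weights: List[float]) -> bool:
    """Hypothetical helper: check that each group of four has at most two nonzeros."""
    return all(
        sum(1 for w in weights[s:s + 4] if w != 0.0) <= 2
        for s in range(0, len(weights), 4)
    )


if __name__ == "__main__":
    w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
    p = prune_2_4(w)
    print(p)                 # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
    print(satisfies_2_4(p))  # True
```

Because the pattern is fixed at two-of-four, the hardware can store only the nonzero values plus small position indices, which is what allows sparse matrix math to skip the zeroed half of the work.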

48GB GPU Memory

Tackle memory-intensive applications and workloads like data science, simulation, 3D modeling, and rendering with 48GB of ultra-fast GDDR6 memory. Allocate memory to multiple users with vGPU software to distribute large workloads among creative, data science, and design teams.

Transformer Engine

Transformer Engine dramatically accelerates AI performance and improves memory utilization for both training and inference. Harnessing the Ada Lovelace fourth-generation Tensor Cores, Transformer Engine intelligently scans the layers of transformer neural networks and automatically recasts between FP8 and FP16 precision to speed up both training and inference.
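Running a layer in FP8 only works if tensor values are first scaled into the narrow FP8 range. The sketch below illustrates that general idea with simple per-tensor scaling into the E4M3 format (4 exponent bits, 3 mantissa bits, largest finite value 448). It is an assumption-laden simplification, not Transformer Engine's actual algorithm or API: `round_to_e4m3`, `quantize_fp8`, and `dequantize_fp8` are hypothetical names, the scale is computed from the current tensor only, and out-of-range values are assumed to saturate at ±448.

```python
import math
from typing import List, Tuple

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format


def round_to_e4m3(x: float) -> float:
    """Hypothetical helper: round x to the nearest E4M3 value (ties to even),
    saturating at +/-448 (an assumed overflow behavior for this sketch)."""
    if x == 0.0:
        return 0.0
    mag = abs(x)
    if mag < 2.0 ** -6:
        quantum = 2.0 ** -9          # spacing of E4M3 subnormals
    else:
        _, exp = math.frexp(mag)     # mag = m * 2**exp with 0.5 <= m < 1
        quantum = 2.0 ** (exp - 4)   # 1 implicit + 3 explicit mantissa bits
    rounded = round(mag / quantum) * quantum  # Python round() is half-to-even
    return math.copysign(min(rounded, E4M3_MAX), x)


def quantize_fp8(values: List[float]) -> Tuple[List[float], float]:
    """Hypothetical helper: scale so the largest magnitude maps to E4M3_MAX,
    then round every scaled value to E4M3. Returns (fp8 values, scale)."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def dequantize_fp8(q: List[float], scale: float) -> List[float]:
    """Hypothetical helper: undo the per-tensor scale."""
    return [v / scale for v in q]


if __name__ == "__main__":
    x = [0.001, -0.02, 0.5, 3.5]
    q, s = quantize_fp8(x)
    print(s)                     # 128.0
    print(q)                     # [0.125, -2.5, 64.0, 448.0]
    print(dequantize_fp8(q, s))  # [0.0009765625, -0.01953125, 0.5, 3.5]
```

The round trip shows the trade-off: large values survive exactly, while small ones pick up rounding error. This is why a precision-management layer has to choose scales carefully and fall back to FP16 where FP8 would lose too much accuracy.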

Efficiency and Security

The L40S GPU is optimized for 24/7 enterprise data center operations and is designed, built, tested, and supported by NVIDIA to ensure maximum performance, durability, and uptime. The L40S GPU meets the latest data center standards, is Network Equipment-Building System (NEBS) Level 3 ready, and features secure boot with root of trust technology, providing an additional layer of security for data centers.

DLSS 3

The L40S GPU enables ultra-fast rendering and smoother frame rates with NVIDIA DLSS 3. This breakthrough frame-generation technology leverages deep learning and the latest hardware innovations in the Ada Lovelace architecture and the L40S GPU, including fourth-generation Tensor Cores and an Optical Flow Accelerator, to boost rendering performance, deliver higher frames per second (FPS), and significantly reduce latency.


| Title | Version | Date | Size |
|---|---|---|---|
| L40S Data Sheet | | | |

Tags: NVIDIA, L40S, GP, GPU, Ada Lovelace architecture, AI, Omniverse Enterprise, Rendering, 3D Graphics, Data Science, Virtual Workstations
