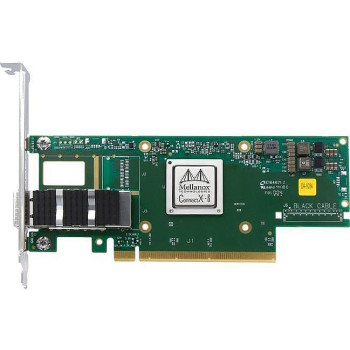

HHHL 100Gb QSFP56 1-Port NVIDIA Mellanox MCX653105A-ECAT ConnectX-6 PCIe x16 Gen 4

- Up to HDR100 and EDR InfiniBand and 100GbE connectivity per port

- Max bandwidth of 200Gb/s across the ConnectX-6 family (this single-port model runs at up to 100Gb/s)

- Up to 215 million messages/sec

- Sub-0.6 µs latency

- Block-level XTS-AES mode hardware encryption

- FIPS capable

- Advanced storage capabilities including block-level encryption and checksum offloads

- Supports both 50G SerDes (PAM4) and 25G SerDes (NRZ)-based ports

- Best-in-class packet pacing with sub-nanosecond accuracy

- PCIe Gen3 and PCIe Gen4 support

- RoHS-compliant

- ODCC-compatible


USD $954.00

*RRP Pricing*

Mellanox ConnectX®-6 VPI Adapter

Single-Port Adapter Card supporting 200Gb/s with Virtual Protocol Interconnect (VPI)

ConnectX-6 Virtual Protocol Interconnect® (VPI) is a groundbreaking addition to the Mellanox ConnectX series of industry-leading adapter cards. Providing up to two ports of 200Gb/s for InfiniBand and Ethernet connectivity, sub-600ns latency and 215 million messages per second, ConnectX-6 VPI delivers the highest performance and most flexible solution for the continually growing demands of data center applications.

In addition to the innovative features of previous ConnectX generations, ConnectX-6 offers a number of enhancements that further improve performance and scalability.

ConnectX-6 VPI supports up to HDR, HDR100, EDR, FDR, QDR, DDR and SDR InfiniBand speeds as well as up to 200, 100, 50, 40, 25, and 10Gb/s Ethernet speeds.

Benefits

- Industry-leading throughput, low CPU utilization and high message rate

- Highest performance and most intelligent fabric for compute and storage infrastructures

- Cutting-edge performance in virtualized networks including Network Function Virtualization (NFV)

- Mellanox Host Chaining technology for economical rack design

- Smart interconnect for x86, Power, Arm, GPU and FPGA-based compute and storage platforms

- Flexible programmable pipeline for new network flows


- Efficient service chaining enablement

- Increased I/O consolidation efficiency, reducing data center costs and complexity

Notes:

a. The ConnectX-6 adapters supplement the IBTA auto-negotiation specification to achieve better bit error rates and longer cable reaches. This supplemental feature is activated only when the adapter is connected to another NVIDIA InfiniBand product.

b. Typical power for ATIS traffic load.

c. The non-operational storage temperature specifications apply to the product without its package.


Tags: HHHL, 100Gb, QSFP56, 1 port, NVIDIA, MELLANOX, MCX653105A-ECAT, ConnectX-6, PCI-E X16, Gen 4, InfiniBand, Ethernet Card

Featured Products

Check out more of our hot products

S8MT MI325X | D75T-7U

S8MT MI325X - HPC/AI Server - AMD EPYC™ 9005 Turin / 9004 Genoa CPU - AMD Instinct™ MI325X 8-G..

$63,400.00

AHG H200/RTX PRO 6000 Blackwell | ESC8000A-E13

AHG - AMD EPYC™ 9005 series Turin CPU - NVIDIA H200 / NVIDIA RTX PRO™ 6000 Blackwell S..

$18,200.00

GX5 B200 | G893-SD1-AAX5

GX5 | G893-SD1-AAX5 HPC/AI Server - 5th/4th Gen Intel® Xeon® Scalable - 8U DP NVIDIA HGX™ B20..

$54,600.00

R7E | RS501A-E12

R7E | RS501A-E12 AMD EPYC™ 9005/9004 single-processor 1U server that supports up to 24 DIMM, 12..

$2,923.00

GZ8 MI325X | G893-ZX1

GZ8 MI325X - HPC/AI Server - AMD EPYC™ 9005 Turin / 9004 Genoa - DP AMD Instinct™ MI325X 8-GPU-..

$63,000.00

GS3 H200 | G593-SD1-AAX3

GS3 | G593-SD1-AAX3 HPC/AI Server - 5th/4th Gen Intel® Xeon® Scalable - 5U DP NVIDIA HGX™ H20..

$41,200.00

WS2 Workstation | B5652F65TV6E2H-2T-N

1S Deskside AI server supporting multiple GPU cards - HPC/AI Server - 5th and 4th Gen Intel® Xeon® Sca..

$3,500.00

AUS site